How to install Longhorn on Exoscale SKS

Note

We strongly recommend using the Exoscale Container Storage Interface (CSI) rather than Longhorn for persistent workloads on SKS. The CSI leverages Exoscale Block Storage and is maintained by Exoscale as an SKS add-on.

Longhorn is an easy-to-use storage solution for Kubernetes. It uses the local storage of your instances and replicates it across nodes to provide highly available block storage. Kubernetes pods can then automatically get storage (called Volumes) from Longhorn. Volume backups can also be created automatically and pushed to Exoscale Simple Object Storage.

Prerequisites

As a prerequisite for this guide, you need:

- The Exoscale CLI
- kubectl and helm
- Basic Linux knowledge
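Before you start, you can check that these command-line tools are on your PATH. This is a minimal sketch that assumes a POSIX shell. The function name check_tools is hypothetical:

```shell
# Hypothetical helper: report which of the given commands are missing from PATH.
# Returns 0 if all of them are found, 1 otherwise.
check_tools() {
  missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage: check_tools exo kubectl helm && echo "all tools found"
```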

Throughout this guide, we will set up a cluster from scratch. Bear in mind that the minimum recommended hardware to run Longhorn is:

- 3 nodes
- 4 CPUs per node
- 4 GiB of memory per node
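If you plan a nodepool with a different instance type, a quick check against these minimums can help. The following is a sketch that assumes you already know the CPU and memory figures of the instance type you want to use. The function name meets_longhorn_minimum is hypothetical:

```shell
# Hypothetical helper: check a planned storage nodepool against the minimum
# recommended hardware stated above (3 nodes, 4 CPUs and 4 GiB of memory each).
# Arguments: node count, CPUs per node, memory per node in GiB (integers).
meets_longhorn_minimum() {
  nodes=$1 cpus=$2 mem_gib=$3
  [ "$nodes" -ge 3 ] && [ "$cpus" -ge 4 ] && [ "$mem_gib" -ge 4 ]
}

# Usage: meets_longhorn_minimum 3 4 8 && echo "meets the Longhorn minimum"
```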

Additionally, if you want to use the backup to S3/Exoscale SOS functionality, you also need to:

- Create an Object Storage bucket, either via the Portal or the CLI. You can refer to the Simple Object Storage documentation for more details.
- Create an IAM key with access to the previously created Object Storage bucket, either via the Portal or the CLI. You can refer to the IAM documentation for more details.

Note

This guide only provides a quick start to deploy Longhorn storage. While it should be suitable for most use cases, Longhorn has many configurable options that must be considered for your specific needs and production environments. Please also note that Longhorn is not maintained by Exoscale, so we can provide only limited support if you encounter issues with this software.

Preparing the cluster

Longhorn provides the storage foundation for the other workloads of your cluster, and depending on your use case it can be rather resource-intensive. For this reason, we recommend deploying it in a dedicated nodepool. Both Longhorn and your other workloads benefit from this isolation, because they do not compete with each other for resources. We will also take the opportunity to give the instances of this dedicated nodepool bigger disks, which reduces the storage cost per GB.

In this guide, we use Calico as the CNI plugin. If you want to use Cilium instead, please take a look at the relevant part of our documentation.

First, we need to create the security group and the rules required for Calico:

```shell
exo compute security-group create my-cluster

# [Example output]
# ✔ Creating Security Group "my-cluster"... 3s
# ┼──────────────────┼──────────────────────────────────────┼
# │ SECURITY GROUP │ │
# ┼──────────────────┼──────────────────────────────────────┼
# │ ID │ 40f7c9be-a187-499e-b55f-b3e549124199 │
# │ Name │ my-cluster │
# │ Description │ │
# │ Ingress Rules │ - │
# │ Egress Rules │ - │
# │ External Sources │ - │
# │ Instances │ - │
# ┼──────────────────┼──────────────────────────────────────┼
```

```shell
exo compute security-group rule add my-cluster \
  --description "NodePort services" \
  --protocol tcp \
  --network 0.0.0.0/0 \
  --port 30000-32767

# [Example output]
# ✔ Adding rule to Security Group "my-cluster"... 3s
# ┼──────────────────┼──────────────────────────────────────────────────────────────────────────────────────────────┼
# │ SECURITY GROUP │ │
# ┼──────────────────┼──────────────────────────────────────────────────────────────────────────────────────────────┼
# │ ID │ 40f7c9be-a187-499e-b55f-b3e549124199 │
# │ Name │ my-cluster │
# │ Description │ │
# │ Ingress Rules │ │
# │ │ fafa4fe1-35ef-49e0-9742-52606d05fbe1 NodePort services TCP 0.0.0.0/0 30000-32767 │
# │ │ │
# │ Egress Rules │ - │
# │ External Sources │ - │
# │ Instances │ - │
# ┼──────────────────┼──────────────────────────────────────────────────────────────────────────────────────────────┼
```

```shell
exo compute security-group rule add my-cluster \
  --description "Kubelet API" \
  --protocol tcp \
  --port 10250 \
  --security-group my-cluster

# [Example output]
# ✔ Adding rule to Security Group "my-cluster"... 3s
# ┼──────────────────┼──────────────────────────────────────────────────────────────────────────────────────────────────┼
# │ SECURITY GROUP │ │
# ┼──────────────────┼──────────────────────────────────────────────────────────────────────────────────────────────────┼
# │ ID │ 40f7c9be-a187-499e-b55f-b3e549124199 │
# │ Name │ my-cluster │
# │ Description │ │
# │ Ingress Rules │ │
# │ │ 480ab97a-80eb-4310-8865-525d2701a485 Kubelet API TCP SG:my-cluster 10250 │
# │ │ fafa4fe1-35ef-49e0-9742-52606d05fbe1 NodePort services TCP 0.0.0.0/0 30000-32767 │
# │ │ │
# │ Egress Rules │ - │
# │ External Sources │ - │
# │ Instances │ - │
# ┼──────────────────┼──────────────────────────────────────────────────────────────────────────────────────────────────┼
```

```shell
exo compute security-group rule add my-cluster \
  --description "Calico overlay" \
  --protocol udp \
  --port 4789 \
  --security-group my-cluster

# [Example output]
# ✔ Adding rule to Security Group "my-cluster"... 3s
# ┼──────────────────┼──────────────────────────────────────────────────────────────────────────────────────────────────┼
# │ SECURITY GROUP │ │
# ┼──────────────────┼──────────────────────────────────────────────────────────────────────────────────────────────────┼
# │ ID │ 40f7c9be-a187-499e-b55f-b3e549124199 │
# │ Name │ my-cluster │
# │ Description │ │
# │ Ingress Rules │ │
# │ │ 480ab97a-80eb-4310-8865-525d2701a485 Kubelet API TCP SG:my-cluster 10250 │
# │ │ fafa4fe1-35ef-49e0-9742-52606d05fbe1 NodePort services TCP 0.0.0.0/0 30000-32767 │
# │ │ 887e3cb3-2a97-4402-ab03-f73e08ffd870 Calico overlay UDP SG:my-cluster 4789 │
# │ │ │
# │ Egress Rules │ - │
# │ External Sources │ - │
# │ Instances │ - │
# ┼──────────────────┼──────────────────────────────────────────────────────────────────────────────────────────────────┼
```

Then create the cluster with a default nodepool for your general-purpose workloads:

```shell
exo compute sks create my-cluster \
  --zone de-fra-1 \
  --service-level pro \
  --nodepool-name general \
  --nodepool-instance-prefix node-gp \
  --nodepool-size 3 \
  --nodepool-security-group my-cluster

# [Example output]
# ✔ Creating SKS cluster "my-cluster"... 2m9s
# ✔ Adding Nodepool "general"... 3s
# ┼───────────────┼──────────────────────────────────────────────────────────────────┼
# │ SKS CLUSTER │ │
# ┼───────────────┼──────────────────────────────────────────────────────────────────┼
# │ ID │ 3416cf28-e403-4fe8-b82d-bb5a79eefdaa │
# │ Name │ my-cluster │
# │ Description │ │
# │ Zone │ de-fra-1 │
# │ Creation Date │ 2023-10-16 11:25:16 +0000 UTC │
# │ Endpoint │ https://3416cf28-e403-4fe8-b82d-bb5a79eefdaa.sks-de-fra-1.exo.io │
# │ Version │ 1.28.2 │
# │ Service Level │ pro │
# │ CNI │ calico │
# │ Add-Ons │ exoscale-cloud-controller │
# │ │ metrics-server │
# │ State │ running │
# │ Labels │ n/a │
# │ Nodepools │ c08b157a-7438-4845-b1c4-ec0ab45a0ba8 | general │
# ┼───────────────┼──────────────────────────────────────────────────────────────────┼
```

Finally, we add the dedicated nodepool for Longhorn pods.

As Longhorn requires at least 4 GiB of memory and 4 CPUs per node, we choose the large instance type for this nodepool.

We don’t want non-Longhorn pods to be scheduled on this pool, so we also set a taint: storage=longhorn with the NoSchedule effect.

Since we want Longhorn to use storage from this pool only, we label its nodes with node.longhorn.io/create-default-disk set to true.

```shell
exo compute sks nodepool add my-cluster storage \
  --zone de-fra-1 \
  --instance-prefix node-sto \
  --instance-type large \
  --size 3 \
  --label=node.longhorn.io/create-default-disk=true \
  --taint=storage=longhorn:NoSchedule \
  --disk-size 100 \
  --security-group my-cluster

# [Example output]
# ✔ Adding Nodepool "storage"... 3s
# ┼──────────────────────┼───────────────────────────────────────────┼
# │ SKS NODEPOOL │ │
# ┼──────────────────────┼───────────────────────────────────────────┼
# │ ID │ 345ad947-2a45-4f0e-89a5-e83b8696708c │
# │ Name │ storage │
# │ Description │ │
# │ Creation Date │ 2023-10-16 11:27:23 +0000 UTC │
# │ Instance Pool ID │ 803625c9-f2b5-45f5-b960-c4529ae23cc8 │
# │ Instance Prefix │ node-sto │
# │ Instance Type │ large │
# │ Template │ sks-node-1.28.jammy │
# │ Disk Size │ 100 │
# │ Anti Affinity Groups │ n/a │
# │ Security Groups │ my-cluster │
# │ Private Networks │ n/a │
# │ Version │ 1.28.2 │
# │ Size │ 3 │
# │ State │ running │
# │ Taints │ storage=longhorn:NoSchedule │
# │ Labels │ node.longhorn.io/create-default-disk:true │
# │ Add Ons │ n/a │
# ┼──────────────────────┼───────────────────────────────────────────┼
```

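Now, we generate a kubeconfig file and set it as the default for the current shell session with the KUBECONFIG environment variable. The -g system:masters flag places the user in the cluster administrators group. The -t flag sets how long the credentials remain valid, in seconds: 86400 × 7 = 604800 seconds, that is, 7 days.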

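```shell
# Make sure the target directory exists, otherwise the redirection fails.
mkdir -p "$HOME/.kube"

exo compute sks kubeconfig my-cluster admin \
  --zone de-fra-1 \
  -g system:masters \
  -t $((86400 * 7)) > "$HOME/.kube/my-cluster.config"

export KUBECONFIG="$HOME/.kube/my-cluster.config"
```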

You can quickly check the status of your cluster:

```shell
kubectl get nodes

# [Example output]
# NAME                   STATUS   ROLES    AGE   VERSION
# node-gp-f412c-hqtda    Ready    <none>   86s   v1.28.2
# node-gp-f412c-iatud    Ready    <none>   86s   v1.28.2
# node-gp-f412c-ktnwa    Ready    <none>   87s   v1.28.2
# node-sto-488d1-dbfgc   Ready    <none>   47s   v1.28.2
# node-sto-488d1-hhopc   Ready    <none>   46s   v1.28.2
# node-sto-488d1-srugi   Ready    <none>   51s   v1.28.2
```

We now have a cluster with 2 nodepools. Everything is in place to deploy Longhorn.
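If you script these steps, you may want to confirm that the storage nodes are Ready before you install Longhorn. The following is a minimal sketch that assumes a POSIX shell with awk. The function name count_ready_nodes is hypothetical. It counts the nodes whose STATUS column is exactly Ready in the output of kubectl get nodes --no-headers. When you combine it with the label selector of the storage nodepool, it should print 3 for this cluster:

```shell
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin
# and print how many nodes have STATUS exactly "Ready" (second column).
count_ready_nodes() {
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Usage (only the nodes labeled for Longhorn default disks):
#   kubectl get nodes -l node.longhorn.io/create-default-disk=true --no-headers \
#     | count_ready_nodes
```

A cordoned node reports Ready,SchedulingDisabled and is deliberately not counted.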

Deploying Longhorn

We will follow the official documentation on deploying with Helm, with a few adaptations: tolerations so that Longhorn can run on the tainted storage nodepool, and a setting that restricts Longhorn's default disks to the labeled storage nodes.

Initialize the Helm chart repo:

```shell
helm repo add longhorn https://charts.longhorn.io
# ...
helm repo update

# [Example output]
# Hang tight while we grab the latest from your chart repositories...
# ...Successfully got an update from the "longhorn" chart repository
# Update Complete. ⎈Happy Helming!⎈
```

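The nodes where we want to deploy Longhorn are tainted with storage=longhorn:NoSchedule. We therefore add a matching toleration to the Longhorn manager. We also set the same taint in the taintToleration setting, which Longhorn applies to the components it deploys itself.

Additionally, we enable the “create default disk on labeled nodes” option, so that Longhorn only creates disks on the nodes labeled with node.longhorn.io/create-default-disk=true.

We can now deploy the Helm chart with these settings: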

```shell
helm upgrade --install longhorn longhorn/longhorn \
  --namespace longhorn-system \
  --create-namespace \
  --version 1.5.1 \
  --set "defaultSettings.createDefaultDiskLabeledNodes=true" \
  --set "defaultSettings.taintToleration=storage=longhorn:NoSchedule" \
  --set "longhornManager.tolerations[0].key=storage" \
  --set "longhornManager.tolerations[0].operator=Equal" \
  --set "longhornManager.tolerations[0].value=longhorn" \
  --set "longhornManager.tolerations[0].effect=NoSchedule"
```
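If you prefer to keep these settings under version control, you can write the same values to a Helm values file instead of passing them with --set. The following is a minimal sketch. The file name longhorn-values.yaml is an assumption, and the keys mirror the --set flags above one to one:

```shell
# Write chart values equivalent to the --set flags above.
# longhorn-values.yaml is an arbitrary, hypothetical file name.
cat > longhorn-values.yaml <<'EOF'
defaultSettings:
  createDefaultDiskLabeledNodes: true
  taintToleration: "storage=longhorn:NoSchedule"
longhornManager:
  tolerations:
    - key: storage
      operator: Equal
      value: longhorn
      effect: NoSchedule
EOF

# Then install or upgrade with:
#   helm upgrade --install longhorn longhorn/longhorn \
#     --namespace longhorn-system --create-namespace \
#     --version 1.5.1 --values longhorn-values.yaml
```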

Deploying Longhorn takes between 3 and 5 minutes. You can follow the progress with the following command (press Ctrl+C to exit):

```shell
kubectl get pods \
  --namespace longhorn-system \
  --watch
```

Once all pods in the longhorn-system namespace are Running and all their containers are ready, Longhorn is ready to use.
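Instead of watching the output, a script can list the pods that are not fully up yet. The following sketch uses a hypothetical function named not_ready_pods. It reads kubectl get pods --no-headers output and prints every pod whose status is not Running, or whose READY column (for example 0/1) shows containers that are not ready yet. As an alternative, kubectl wait --namespace longhorn-system --for=condition=Ready pod --all --timeout=600s blocks until the pods are ready. Note that kubectl wait only covers the pods that exist when the command starts.

```shell
# Hypothetical helper: read `kubectl get pods --no-headers` output on stdin and
# print the names of pods that are not Running or not fully ready (READY x/y, x != y).
not_ready_pods() {
  awk '{
    split($2, ready, "/")
    if ($3 != "Running" || ready[1] != ready[2]) print $1
  }'
}

# Usage: an empty result means all Longhorn pods are up.
#   kubectl get pods --namespace longhorn-system --no-headers | not_ready_pods
```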

Accessing the Longhorn UI

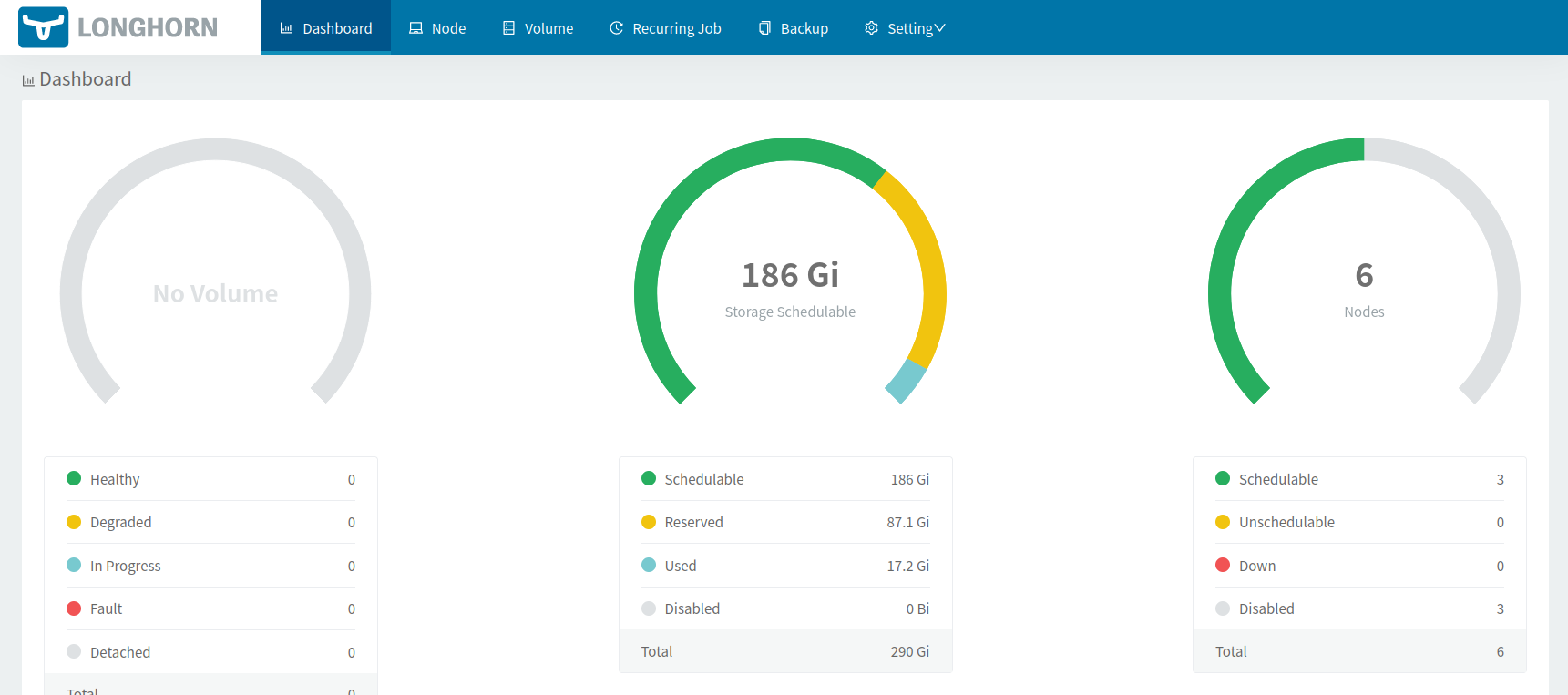

The web UI shows information about the cluster, such as storage usage, and lets you configure Volumes further.

By default, the UI is only available from the internal Kubernetes network. Use port-forwarding to access it:

```shell
kubectl port-forward deployment/longhorn-ui 7000:8000 -n longhorn-system
```

This opens port 7000 locally and forwards it to port 8000 of the UI container.

You can access the interface at http://127.0.0.1:7000.

Using Longhorn Volumes in Kubernetes

Creating a persistent volume claim

To use storage, you need a PersistentVolumeClaim (PVC), which requests storage from a storage class. A PVC is comparable to a pod: a pod claims compute resources such as CPU and RAM, while a PVC claims storage.

The following YAML manifest defines such a PVC, requesting 2 GiB of storage. The longhorn storage class it uses replicates the volume 3 times on different nodes of the cluster.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: example-pvc
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: longhorn
  resources:
    requests:
      storage: 2Gi
```

Note

This creates a volume with an ext4 filesystem (the default). If your application or database supports raw block devices, consider the Block Volume example in the Longhorn documentation instead.

Note

You can also use a custom storage class if you want to modify parameters like replication count, or if you want to enable scheduled snapshots or backups.
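To create claims like example-pvc with other names or sizes without editing YAML by hand, you can generate the manifest from a small shell function and pipe it into kubectl apply -f -. The following is a sketch. The function name pvc_manifest is hypothetical, and the storage class is fixed to longhorn:

```shell
# Hypothetical helper: print a PVC manifest that uses the longhorn storage class.
# Arguments: claim name, requested size (for example 2Gi).
pvc_manifest() {
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: $1
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: longhorn
  resources:
    requests:
      storage: $2
EOF
}

# Usage: pvc_manifest example-pvc 2Gi | kubectl apply -f -
```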

Linking the Persistent Volume Claim with a pod

A pod in the Kubernetes world consists of one or more containers. Such a pod can mount a PVC by referring to its name (in this case example-pvc).

In the following example, we create a pod using an Ubuntu container image and mount the storage defined above in /data:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-test
spec:
  containers:
    - name: container-test
      image: ubuntu
      imagePullPolicy: IfNotPresent
      command:
        - "sleep"
        - "604800"
      volumeMounts:
        - name: volv
          mountPath: /data
  volumes:
    - name: volv
      persistentVolumeClaim:
        claimName: example-pvc
```

Save each manifest into a .yaml file and apply it with kubectl apply -f yourfilename.yaml.

Check the status of your pod and PVC with kubectl get pod and kubectl get pvc.

The Ubuntu container runs sleep 604800, so it stays up for a week. This lets you run commands inside it and check its status.

Shortly after you apply the manifests, the Volume tab of the Longhorn UI shows the newly created volume. It is attached to the Ubuntu pod and replicated 3 times.

To test your storage, open a shell in the pod with kubectl exec -it pod-test -- /bin/bash and write something into the /data folder.

Even if you delete or kill the pod (for example, with kubectl delete pod pod-test), you can re-create it with kubectl apply and see that the data written into /data is still available.
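You can also script the persistence test described above. The following sketch rests on a few assumptions. The pod manifest has been saved as pod.yaml, which is a hypothetical file name. The KUBECTL variable is also hypothetical: set KUBECTL=echo to print the commands instead of running them.

```shell
# Hypothetical helper: write a file to the Longhorn volume, re-create the pod,
# and read the file back. Set KUBECTL=echo to only print the commands.
# Assumes the pod manifest above was saved as pod.yaml.
check_persistence() {
  k=${KUBECTL:-kubectl}
  $k exec pod-test -- sh -c 'echo "hello longhorn" > /data/hello.txt' || return 1
  $k delete pod pod-test || return 1
  $k apply -f pod.yaml || return 1
  $k wait --for=condition=Ready pod/pod-test --timeout=180s || return 1
  # Prints "hello longhorn" if the data survived the pod deletion.
  $k exec pod-test -- cat /data/hello.txt
}
```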

Setting a storageclass as default

Longhorn comes with a storageclass named longhorn, which defines:

- the number of replicas
- the backup schedule
- further volume properties
- that the volumes it provisions are Longhorn volumes.

Note: you can also read about the custom storage class example in the Longhorn documentation for more details.
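To illustrate such a custom storage class, the sketch below prints a StorageClass manifest that uses the Longhorn provisioner (driver.longhorn.io) with a configurable replica count. The function name and the example class name are hypothetical. Check the Longhorn documentation for the full list of parameters, such as recurring snapshot or backup jobs. With three storage nodes, a replica count above 3 will likely not be schedulable under Longhorn's default anti-affinity settings.

```shell
# Hypothetical helper: print a Longhorn StorageClass manifest.
# Arguments: storage class name, number of replicas.
longhorn_storageclass() {
  cat <<EOF
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: $1
provisioner: driver.longhorn.io
allowVolumeExpansion: true
reclaimPolicy: Delete
volumeBindingMode: Immediate
parameters:
  numberOfReplicas: "$2"
  staleReplicaTimeout: "2880"
  fsType: "ext4"
EOF
}

# Usage: longhorn_storageclass longhorn-2-replicas 2 | kubectl apply -f -
```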

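You might want to configure the longhorn storageclass (or your custom storageclass, if applicable) as the default storageclass in your cluster. Helm charts and other workloads whose PVCs do not name a storage class then get Longhorn volumes directly.

There are two ways to set your default storageclass. You can change the installation configuration and reapply it: the persistence.defaultClass value of the Helm chart, or longhorn.yaml if you installed Longhorn from the manifest. Alternatively, you can run this command at any time after installing Longhorn: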

```shell
kubectl patch storageclass longhorn -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'
```
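Normally only one storage class should be marked as default. To check which classes currently carry the default annotation, you can filter the output of kubectl get storageclass, which shows (default) after the name of a default class. The following sketch uses the hypothetical function name default_storageclasses:

```shell
# Hypothetical helper: read `kubectl get storageclass --no-headers` output on
# stdin and print the names of the classes marked "(default)".
default_storageclasses() {
  awk '$2 == "(default)" { print $1 }'
}

# Usage: kubectl get storageclass --no-headers | default_storageclasses
```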

Configuring Backup to Exoscale SOS

Longhorn can back up your volumes to S3-compatible storage such as Exoscale Simple Object Storage. Backups can be triggered manually or on a schedule. Longhorn also automatically detects backups that are already present in the bucket.

Configuring the access secret

You need the following data (see the links in the Prerequisites section above):

- An IAM key and secret pair that provides access to your bucket.
- The S3 endpoint of the zone of your bucket. It has the format https://sos-ZONE.exo.io. Make sure to substitute ZONE with the relevant zone.

Note

You can find all Exoscale zones on our website or with the CLI command exo zones list.

Convert the endpoint URL, the IAM key, and the IAM secret to Base64 (replace DATA in the commands below):

- On Linux/Mac:

```shell
echo -n "DATA" | base64
```

- On Windows (inside PowerShell):

```powershell
$bytes = [System.Text.Encoding]::UTF8.GetBytes("DATA")
[Convert]::ToBase64String($bytes)
```
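GNU base64 wraps its output every 76 characters, which would break long values in the manifest. The following is a small, portable sketch of a helper that encodes its argument without a trailing newline and strips any line breaks from the result. The name b64 is hypothetical:

```shell
# Hypothetical helper: Base64-encode the first argument on a single line.
# printf '%s' avoids adding a trailing newline to the encoded data.
b64() {
  printf '%s' "$1" | base64 | tr -d '\n'
}

# Usage: b64 "https://sos-at-vie-1.exo.io"
```

For the Vienna endpoint, this produces the same value as the one used in the manifest below.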

Save the following manifest in a new .yaml file and fill in the Base64-encoded values of the 3 fields in the data section.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: exoscale-sos-secret
  namespace: longhorn-system
type: Opaque
data:
  # S3 endpoint of the bucket's zone, in base64
  AWS_ENDPOINTS: aHR0cHM6Ly9zb3MtYXQtdmllLTEuZXhvLmlv # https://sos-at-vie-1.exo.io
  AWS_ACCESS_KEY_ID: YourBase64AccessKey # access key for the bucket in base64
  AWS_SECRET_ACCESS_KEY: YourBase64SecretKey # secret key for the bucket in base64
```

Apply the manifest with kubectl apply -f FILENAME.yaml. This creates a secret named exoscale-sos-secret in the longhorn-system namespace, which gives Longhorn access to the bucket.
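Alternatively, Kubernetes accepts plain-text values in the stringData field of a Secret and encodes them itself, so you can skip the manual Base64 step. The following sketch prints such a manifest from environment variables. The variable names SOS_ENDPOINT, SOS_ACCESS_KEY and SOS_SECRET_KEY are hypothetical, and the function stops with an error if one of them is unset:

```shell
# Hypothetical helper: print the exoscale-sos-secret manifest using stringData,
# so that Kubernetes performs the Base64 encoding.
# Reads SOS_ENDPOINT, SOS_ACCESS_KEY and SOS_SECRET_KEY from the environment.
sos_secret_manifest() {
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: exoscale-sos-secret
  namespace: longhorn-system
type: Opaque
stringData:
  AWS_ENDPOINTS: "${SOS_ENDPOINT:?set SOS_ENDPOINT}"
  AWS_ACCESS_KEY_ID: "${SOS_ACCESS_KEY:?set SOS_ACCESS_KEY}"
  AWS_SECRET_ACCESS_KEY: "${SOS_SECRET_KEY:?set SOS_SECRET_KEY}"
EOF
}

# Usage:
#   SOS_ENDPOINT=https://sos-at-vie-1.exo.io SOS_ACCESS_KEY=... SOS_SECRET_KEY=... \
#     sos_secret_manifest | kubectl apply -f -
```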

Configuring the Backup Destination in Longhorn

Open the Longhorn UI and go to the Settings tab, then General, and scroll down to the Backup section.

Complete the Backup Target using this format:

s3://BUCKETNAME@ZONE/

In this example, s3://my-cool-bucket@at-vie-1/ is for a bucket called my-cool-bucket in the at-vie-1 (Vienna) zone.
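If you automate the setup, a tiny helper that builds the Backup Target from the bucket name and zone helps avoid typos. The following is a sketch. The function name backup_target is hypothetical:

```shell
# Hypothetical helper: build a Longhorn backup target URL for Exoscale SOS,
# following the s3://BUCKETNAME@ZONE/ format.
# Arguments: bucket name, zone (for example at-vie-1).
backup_target() {
  printf 's3://%s@%s/\n' "$1" "$2"
}

# Usage: backup_target my-cool-bucket at-vie-1
```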

In the Backup Target Credential Secret field, enter exoscale-sos-secret, the name of the secret created above.

Scroll down and click Save to apply your configuration.

Then go to the Volume tab. If the Longhorn UI does not display any errors, the connection is successful.

If you already created a volume, you can test backing it up manually. Click on the respective volume in the Volume tab. Then click on Create Backup.

Further links

SKS Nodepool taints documentation

Longhorn:

- Working with taints and tolerations

- Tip: Set Longhorn To Only Use Storage On A Specific Set Of Nodes

Read the full Longhorn documentation.

See the latest Longhorn releases.